Choosing the right browser is crucial for your security, privacy, and overall experience on the web. This is especially important now that Chrome is dropping support for the popular uBlock Origin adblocker browser extension.

I have evaluated the security, privacy, extensibility, and ethical alignment of today’s most popular browsers in order to determine which is best, depending on your needs. Overall, I recommend Brave* for most people, as it has the best default settings favoring personal agency (i.e. minimal anti-features on by default) and meets my privacy and security expectations. If you need more extensibility, choose Firefox.

The contenders:

These browsers form the basis of our modern web infrastructure. However, none live up to an ideal standard of what browsers should be. I believe that we can do better.

* iOS users have no choice other than WebKit-based browser engines, such as Safari. Although Apple may technically allow third-party browser engines in the EU, this is not yet the case in practice, because Apple does not allow third-party browser engine developers to easily test their software outside of the EU.

Privacy: Brave wins

Brave and Safari block many trackers with their EasyPrivacy tracker blocklist integrations, which can help protect people from personally targeted advertising and discriminatory pricing based on their personal information. Brave does this by default in every window, whereas Safari’s tracker blocklist integration is limited to Private Browsing mode.

Chrome, Firefox, Safari, and Edge use network and device resources in order to help with ‘privacy-preserving’ measurement of ad effectiveness by default. This is unnecessary and only serves to further enrich advertisers at cost to the user.

Chrome and Edge turn it up a notch by tracking user behavior in-browser, sharing inferred interest topics with advertisers, and enabling these advertisers to abusively share data cross-site using third-party cookies, a concept supported in their default configuration.

Safari has caused systemic damage to privacy across the web ecosystem on all browsers through its cookie-preferential storage partitioning model, amplified by its iOS market dominance. Safari’s partitioning model incentivizes site owners to use cookies to synchronize data cross-subdomain, which unnecessarily leaks user data over the network. All other browsers also support synchronizing state cross-subdomain locally on-device, but many site owners don’t do this as it’s often much easier to implement a single solution for the common denominator.

Firefox and Edge make a decent attempt at baseline privacy with some tracker blocking features, but lack an EasyPrivacy-based tracker blocker enabled by default.

For a technical comparison of browser privacy behaviors, check out PrivacyTests.org.

Security: Chrome wins

In terms of technical competency and proclivity for security best practices, I believe that the teams behind Chrome, Safari, Firefox, Brave, and Edge all reach my minimum expectations.

Chrome was built around a multi-process architecture that was later adopted by other browsers, sandboxing each tab to prevent malicious code from affecting other tabs or the entire browser. Chrome also benefits from Google’s substantial resources dedicated to security research and frequent updates, ensuring that vulnerabilities are both promptly patched and proactively prevented. These measures make Chrome one of the most secure browsers available. Chrome beats Safari due to its faster release cycle, and both Brave and Edge by being closer to the source of fixes for most Chromium security issues.

Extensibility: Firefox wins

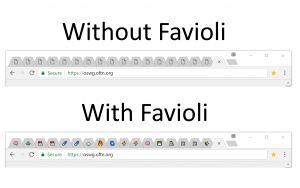

Chromium browsers such as Chrome, Edge, and Brave are transitioning to Manifest V3, in which Google has decided to restrict certain APIs, particularly those that ad blockers and privacy tools rely on. Google has deprecated the blocking form of the webRequest API (the webRequestBlocking permission) that Manifest V2 supported, and has disallowed the upload of new Manifest V2 extensions to the Chrome Web Store. This reduces the capabilities of content-blocking extensions and has raised concerns among developers and privacy advocates, as it weakens users’ ability to block unwanted content (e.g. ads and trackers).

Safari extensions have similar limitations to Chromium browsers, and developers need to pay Apple $99 per year in order to distribute their extensions.

Firefox supports Manifest V3 while retaining blocking webRequest (the webRequestBlocking permission), meaning that extensions can continue to use runtime logic to arbitrarily block content such as trackers and ads. This sets Firefox apart from other browsers as the most extensible overall.
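To make "runtime logic" concrete, here is a minimal sketch of a blocking request listener of the kind Firefox still permits. The blocklist contents and the names `BLOCKED_HOSTS`, `isBlocked`, and `handleRequest` are my own placeholders for illustration; a real content blocker would load a maintained list and use far more sophisticated matching.

```typescript
// Hypothetical blocklist for illustration only; a real extension would load
// a maintained list rather than hard-code two placeholder hosts.
const BLOCKED_HOSTS: ReadonlyArray<string> = ["tracker.example", "ads.example"];

// Returns true when the URL's host is a blocked host or a subdomain of one.
function isBlocked(url: string, blocked: ReadonlyArray<string> = BLOCKED_HOSTS): boolean {
  let host: string;
  try {
    host = new URL(url).hostname;
  } catch {
    return false; // Not a parseable URL: let the request through.
  }
  const labels = host.split(".");
  // For "a.b.c", check "a.b.c", then "b.c", then "c".
  for (let i = 0; i < labels.length; i++) {
    if (blocked.indexOf(labels.slice(i).join(".")) !== -1) {
      return true;
    }
  }
  return false;
}

// A blocking listener decides per request, at runtime, whether to cancel it.
function handleRequest(details: { url: string }): { cancel: boolean } {
  return { cancel: isBlocked(details.url) };
}

// Register the listener only when running inside an extension that exposes
// the WebExtensions `browser` namespace; elsewhere this is a no-op.
const extensionApi = (globalThis as any).browser;
if (extensionApi?.webRequest?.onBeforeRequest) {
  extensionApi.webRequest.onBeforeRequest.addListener(
    handleRequest,
    { urls: ["<all_urls>"] },
    ["blocking"]
  );
}
```

For the listener to take effect, the extension's manifest would need to request the `webRequest` and `webRequestBlocking` permissions along with matching host permissions. Because the decision is made by ordinary code on every request, the extension can apply arbitrary logic to it, which is exactly the capability that the deprecation in Chromium takes away.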

Ethical alignment: Everyone loses

Brave offers an optional, purportedly attention-based, monetization scheme and skims 30% of the value. Fortunately, Brave only has one anti-feature enabled by default: dismissible advertisements to enable this monetization scheme.

Chrome, Edge, and Safari offer browser-level features that unjustly enrich advertisers at cost to the user in their default configuration.

Mozilla has acquired an adtech company that uses behaviorally targeted advertising without stating a clear commitment to end behavioral targeting. I believe that this goes against their own publicly stated manifesto. Contextual targeting is the practice of customizing content (e.g. ads) to be relevant to the site being viewed, as opposed to the determinable characteristics of the person viewing the site. Compared with contextual targeting, behavioral targeting can be abused much more easily for personalized advertising and discriminatory pricing.

What’s next

We need browsers that truly put users in control, and that means building them in the open, without the conflicts of interest that plague ad-driven giants. This is a call to action for everyone, from grassroots communities to philanthropic individuals and forward-thinking developers: let’s fund, create, and sustain new high-quality open source browsers. A truly open web can’t be dominated by a handful of companies who profit from surveillance and lock users into limited ecosystems.

One early in-development browser project is Ladybird, initially created within the open-source SerenityOS community and now developed by the non-profit Ladybird Browser Initiative. Instead of relying on established engines like Chromium or WebKit, it aims to build a new browser engine entirely from scratch, free from for-profit interests. Although still in the early stages, Ladybird demonstrates a commitment to transparency and user control. This model seeks to avoid the conflicts of interest often found in browser development and marks a promising direction for the future of browsing.

Two other projects worth mentioning are the Servo browser engine and the Verso browser, which is powered by Servo. Servo was initially developed by Mozilla, and is now developed by the Servo Project Developers. Servo is intended to be a lightweight, high-performance, and secure browser engine framework. It is written in the Rust programming language, which offers better memory safety properties and concurrency features than many of the existing languages used to develop browser engines.